Welcome to Dither AI

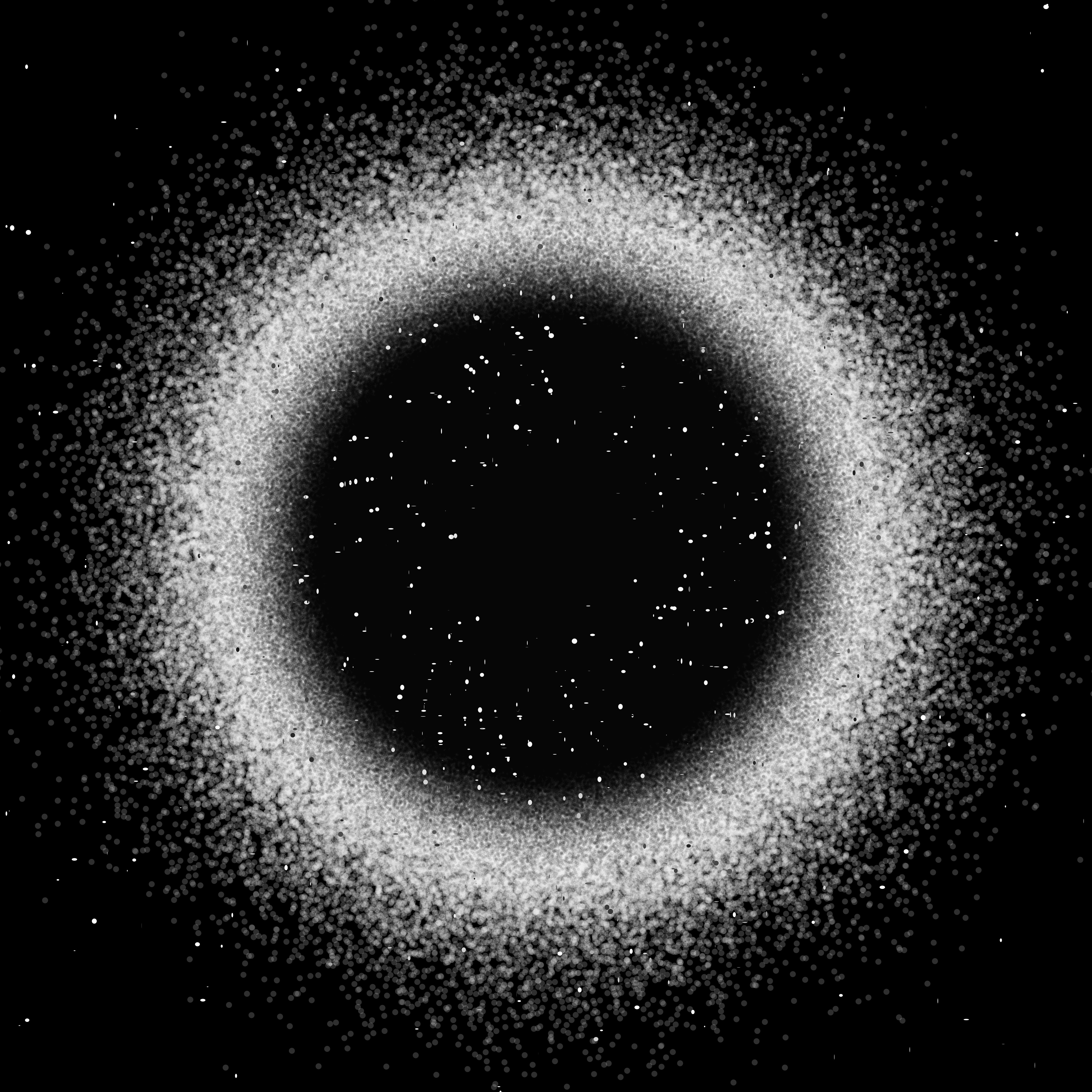

Quick Access

🛠️ Tools & Demos

👥 Community

🎯 About & Vision

About Dither AI

Dither AI is building the transformer time-series modality for multimodal AI models.

Transformers, Modalities, Time-Series and Agents

Transformers have enabled an AI revolution by allowing researchers to drastically increase model size without the limitations of earlier architectures. This design powers recent advances in AI products from companies like OpenAI, Anthropic, and Google. However, transformers require large amounts of high-quality data, which is a significant constraint.

Notice that we did not say large language model (LLM) in the paragraph above. Language is only one of the modalities in the multimodal products released by leading companies. A modality is a kind of data representation, such as images, text, or audio. You can train an LLM (text modality) or a vision model (image modality). You can also train a single model on both text and images to get a multimodal model.

Time-series can be considered another modality. It has its own peculiarities that make it difficult to train with the transformer architecture. However, we have ample evidence that a time-series modality is both possible and could be competitive with traditional analysis techniques while offering a simpler interface. This potential is what we are pursuing, and we have already proven more effective than Google and Amazon in this domain (link).
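To make the idea of time-series as a modality a little more concrete, here is a minimal sketch of one common way a numeric series can be turned into a sequence of token-like units, much as text is split into tokens. This is an illustration only, not a description of Dither AI's models: the function names `make_patches` and `normalize_patch`, the patch length, and the stride are all hypothetical choices made for this example.

```python
from typing import List


def make_patches(series: List[float], patch_len: int, stride: int) -> List[List[float]]:
    """Split a series into fixed-length windows ("patches").

    Each patch plays a role loosely analogous to a token in text.
    Hypothetical helper for illustration; not Dither AI's actual method.
    """
    if patch_len <= 0 or stride <= 0:
        raise ValueError("patch_len and stride must be positive")
    return [
        series[start:start + patch_len]
        for start in range(0, len(series) - patch_len + 1, stride)
    ]


def normalize_patch(patch: List[float]) -> List[float]:
    """Rescale a patch to zero mean and unit variance.

    Series from different sources can live on very different scales,
    so some form of normalization is typically applied before training.
    """
    mean = sum(patch) / len(patch)
    variance = sum((x - mean) ** 2 for x in patch) / len(patch)
    std = variance ** 0.5 or 1.0  # avoid dividing by zero on flat patches
    return [(x - mean) / std for x in patch]


# Example with made-up values.
values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
patches = make_patches(values, patch_len=4, stride=2)
tokens = [normalize_patch(p) for p in patches]
```

With these made-up values, `patches` holds two overlapping windows of four points each. A real system would add many more steps, such as embedding the patches and handling missing or irregularly sampled data.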

We are at a turning point for AI agents and humans. Right now, humans benefit from AI technologies by increasing their own output. Over time, however, that human input will be abstracted away across a variety of fields. The AI systems that take on this low-level human work are called agents. The definition is fuzzy, but it is undeniable that agents will need multiple modalities to interpret the world. They need to understand text, audio, and images.

These agents will need to understand how to make data-driven predictions based on real-world feedback—the time-series modality.

Now

Right now, time-series is the least researched modality, and Dither AI is at the forefront. Now is the time to develop this technology. We are building real tools, designed for both humans and agents, to test the effectiveness of our models in real-world settings.

Solana

When we began in early 2024, publicly available time-series data was scarcer than it is today. We committed to Solana to leverage the rich data it provides. It also served as a test case: we knew that transformers require large amounts of data, and that is what Solana afforded.

Moonshots Wait For No One

If Dither AI is successful, it will expand beyond the current cryptocurrency paradigm. Imagine a model that does for data analytics what ChatGPT did for natural language. We aim to make AI-driven analysis and forecasting accessible to everyone.

We are taking a moonshot because making the world 1.00001x more efficient is a billion-dollar opportunity.