Alpha4U

Why are you still here? Go check out the greatest crypto algorithm on this surface of the hollow earth.

Welcome to Dither AI

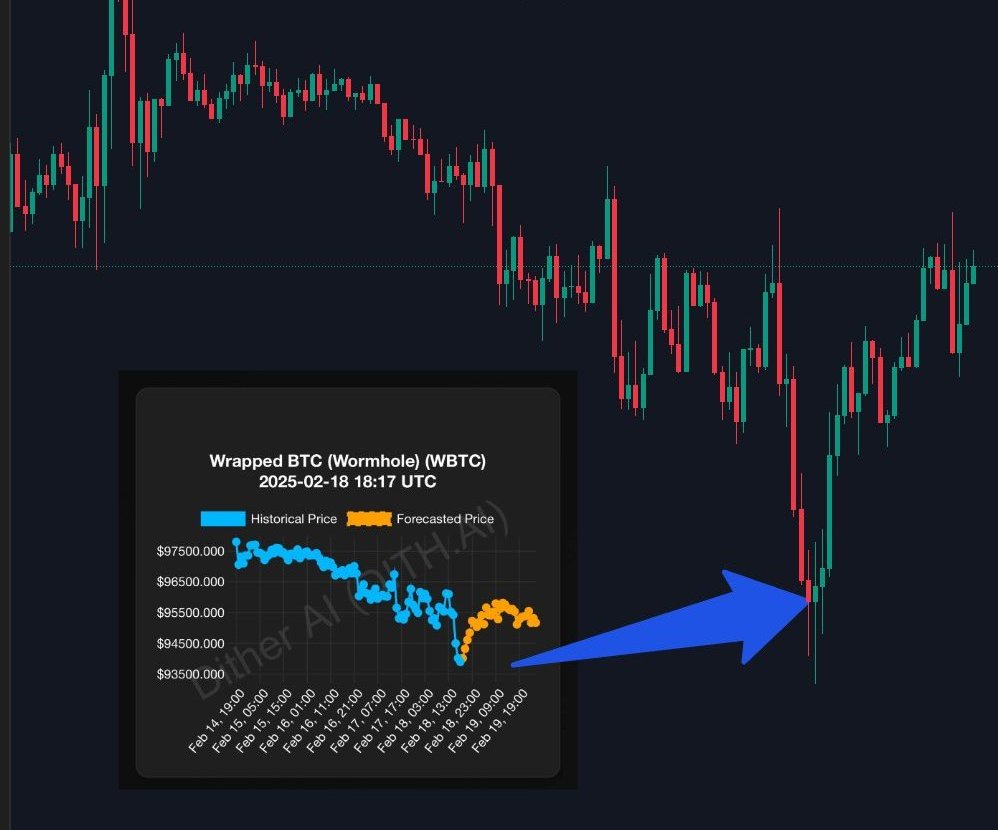

We present Alpha4U. It's the only AI on Solana that learns what you like and generates personalized alpha. Our AI adapts to your strategies and the market so that you never need to adjust a setting. It just works.

Seer is our premium offering. Stay on top of perps, markets, and market-moving news. If it's capital allocation in crypto, then Seer has you covered.

If you are looking for an official partnership or want to use our ground-breaking algorithm, then please try out our web exhibition here.

Engage with other members in our exclusive Telegram Lounge for discussions and support.

Stay up to date with releases and announcements from Dither AI.

Follow the founder. He's okay.

If it's AI × Crypto, then we have been there and done it. We were the first to start the AI reply-guy meta. Before that, we were the first AI token on Solana. Now we are kicking off the algorithmic revolution in crypto.

Dither AI is Artificial Intelligence without the marketing bullshit.